
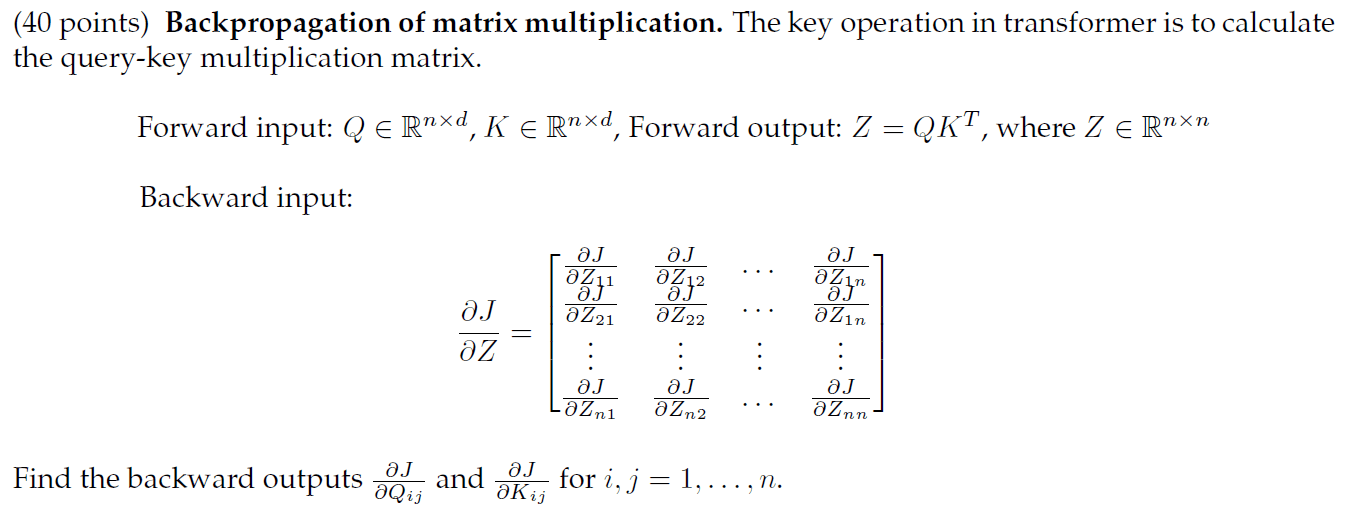

(40 points) Backpropagation of matrix multiplication. The key operation in a transformer is to calculate the query-key multiplication matrix.

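Forward input: $Q \in \mathbb{R}^{n \times d}$, $K \in \mathbb{R}^{n \times d}$

Forward output: $Z = QK^T$, where $Z \in \mathbb{R}^{n \times n}$

Backward input:

$$
\frac{\partial J}{\partial Z} =
\begin{bmatrix}
\frac{\partial J}{\partial Z_{11}} & \frac{\partial J}{\partial Z_{12}} & \cdots & \frac{\partial J}{\partial Z_{1n}} \\
\vdots & \vdots & \ddots & \vdots \\
\frac{\partial J}{\partial Z_{n1}} & \frac{\partial J}{\partial Z_{n2}} & \cdots & \frac{\partial J}{\partial Z_{nn}}
\end{bmatrix}
$$

Find the backward outputs $\frac{\partial J}{\partial Q_{ij}}$ and $\frac{\partial J}{\partial K_{ij}}$ for $i, j = 1, \dots, n$.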

Answer from Sia

Posted over 1 year ago

To solve the backpropagation problem for matrix multiplication in the context of transformers, we need to compute the gradients ∂J/∂Q and ∂J/∂K based on the forward output Z = QK^T.

The backward outputs can be derived using the chain rule of calculus.
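As a sketch of that chain-rule step, written entry by entry (the summation indices $a$, $b$, $c$ are introduced here for illustration and are not part of the original question): since $Z_{ab} = \sum_{c=1}^{d} Q_{ac} K_{bc}$, the entry $Q_{ij}$ only affects row $i$ of $Z$, and the entry $K_{ij}$ only affects column $i$ of $Z$.

$$
\frac{\partial J}{\partial Q_{ij}}
= \sum_{a=1}^{n} \sum_{b=1}^{n} \frac{\partial J}{\partial Z_{ab}} \frac{\partial Z_{ab}}{\partial Q_{ij}}
= \sum_{b=1}^{n} \frac{\partial J}{\partial Z_{ib}} K_{bj}
$$

$$
\frac{\partial J}{\partial K_{ij}}
= \sum_{a=1}^{n} \sum_{b=1}^{n} \frac{\partial J}{\partial Z_{ab}} \frac{\partial Z_{ab}}{\partial K_{ij}}
= \sum_{a=1}^{n} \frac{\partial J}{\partial Z_{ai}} Q_{aj}
$$

The first sum is the $(i, j)$ entry of $\frac{\partial J}{\partial Z} K$, and the second is the $(i, j)$ entry of $\left(\frac{\partial J}{\partial Z}\right)^T Q$.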

The gradients can be computed as follows:

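1. The forward output is given by $Z = QK^T$.

2. The backward input is the upstream gradient $\frac{\partial J}{\partial Z} \in \mathbb{R}^{n \times n}$.

3. To find $\frac{\partial J}{\partial Q}$, we use the relation:

$$\frac{\partial J}{\partial Q} = \frac{\partial J}{\partial Z} K$$

where $K$ is the matrix of keys.

4. To find $\frac{\partial J}{\partial K}$, we use the relation:

$$\frac{\partial J}{\partial K} = \left(\frac{\partial J}{\partial Z}\right)^T Q$$

where $\left(\frac{\partial J}{\partial Z}\right)^T$ is the transpose of the upstream gradient and $Q$ is the query matrix.

Therefore, the backward outputs $\frac{\partial J}{\partial Q_{ij}}$ and $\frac{\partial J}{\partial K_{ij}}$ are the $(i, j)$ entries of the two matrices above. Both gradients have the same shape as $Q$ and $K$, namely $n \times d$, so the valid indices are $i$ ranging from 1 to $n$ and $j$ ranging from 1 to $d$.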
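One way to sanity-check these formulas is a numerical gradient check. The following is a minimal sketch in plain Python, not part of the original answer. It assumes a hypothetical scalar loss $J = \sum_{a,b} W_{ab} Z_{ab}$ with a fixed random weight matrix `W`, chosen only because its upstream gradient is simply $\frac{\partial J}{\partial Z} = W$. All function names (`forward`, `backward`, `numerical_grad`, and so on) are illustrative.

```python
import random

def transpose(A):
    """Transpose a matrix stored as a list of rows."""
    return [list(col) for col in zip(*A)]

def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def forward(Q, K):
    """Forward pass: Z = Q K^T, with shape (n, n)."""
    return matmul(Q, transpose(K))

def backward(Q, K, dZ):
    """Backward pass: return (dJ/dQ, dJ/dK) given dZ = dJ/dZ."""
    dQ = matmul(dZ, K)             # (n, n) @ (n, d) -> (n, d)
    dK = matmul(transpose(dZ), Q)  # (n, n) @ (n, d) -> (n, d)
    return dQ, dK

def loss(Q, K, W):
    """Hypothetical scalar loss J = sum_ab W[a][b] * Z[a][b], so dJ/dZ = W."""
    Z = forward(Q, K)
    return sum(w * z for w_row, z_row in zip(W, Z) for w, z in zip(w_row, z_row))

def numerical_grad(f, M, eps=1e-6):
    """Central-difference gradient of the scalar f() w.r.t. each entry of M."""
    G = [[0.0] * len(M[0]) for _ in M]
    for i in range(len(M)):
        for j in range(len(M[0])):
            old = M[i][j]
            M[i][j] = old + eps
            up = f()
            M[i][j] = old - eps
            down = f()
            M[i][j] = old  # restore the original entry
            G[i][j] = (up - down) / (2 * eps)
    return G

def max_abs_diff(A, B):
    """Largest absolute entry-wise difference between two matrices."""
    return max(abs(a - b) for ra, rb in zip(A, B) for a, b in zip(ra, rb))

def rand_matrix(rows, cols):
    return [[random.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

random.seed(0)
n, d = 3, 2
Q, K, W = rand_matrix(n, d), rand_matrix(n, d), rand_matrix(n, n)

dQ, dK = backward(Q, K, W)  # analytic gradients, using dJ/dZ = W
err_Q = max_abs_diff(dQ, numerical_grad(lambda: loss(Q, K, W), Q))
err_K = max_abs_diff(dK, numerical_grad(lambda: loss(Q, K, W), K))
print("max error in dJ/dQ:", err_Q)
print("max error in dJ/dK:", err_K)
```

Both printed errors should be close to zero if the analytic formulas agree with the finite-difference estimates. Using $n \neq d$ in the check also confirms that both gradients come out with shape $n \times d$.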
